Data is plentiful. What separates modern staffing vendor management systems from traditional ones is the ability to deliver insights in real time rather than through historical data analysis.

HR expert Tim Sackett explains:

“We don’t truly listen to what all the data is telling us. We gather all of the information. We get assessment scores, we get personality and cognitive measures, we do in-depth background screening, we perform behavioral-based interviews, we check the candidates’ professional references, we stalk their Facebook page, we check informal references the candidate doesn’t know about, we do just about anything to get as much knowledge as we can on a candidate. And then what happens? We make a decision based on a gut feel.”

Traditional recruitment techniques involve decisions rooted in emotions rather than data, often resulting in slow and cumbersome processes, excessive costs, and bad hires. Recognizing these downsides, more businesses are moving towards evidence-based hiring, where decisions are backed by data rather than gut feel. The emergence of Big Data and its corresponding technologies within the staffing industry has vastly improved the accessibility and availability of data, giving businesses a warehouse of data to analyze along with a wide range of analytic options. But there’s still a lag in how that data is processed and put to use. Too often, companies that consider themselves data-driven look at historical data to prove a hypothesis rather than analyzing current data to uncover ongoing realities.

As Michael Skapinker observed in the Financial Times, “It is not just our biases that get in the way but that past performance cannot predict results.”

Real-Time Data-Driven Insights to Boost Contingent Program Success

Frequent market shifts and extreme volatility in the staffing industry leave staffing suppliers exposed to constant change. This affects their staffing capabilities and, in turn, their performance. Relying on historical data to drive your contingent program decisions not only risks engaging poorly performing suppliers, but can also lead to losses in time, money, and valuable resources. This is why real-time analytics and scorecards are key. They give contingent workforce programs illumination rather than just support, helping businesses make timely, data-backed decisions while keeping the overall program healthy.

Here are some benefits of real-time analytics for contingent workforce programs.

1. Optimize Hiring and Control Costs

Every business strives to make cost-optimized decisions wherever possible, and businesses that use data effectively are better placed to realize cost savings.

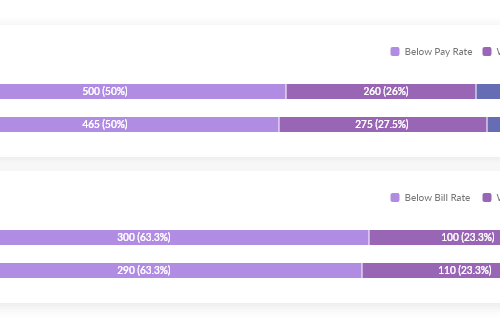

Using real-time data to compare market rates helps ensure that the bill rates for your program are equitable for specific roles in specific locations. Having access to this information also helps you avoid missing out on great talent by underpaying, or going over budget due to excessive rates.

Additionally, real-time notifications for submittals above pay/bill rates help businesses promptly identify suppliers that are outside the rate guidelines, giving them more visibility and control over program spend.
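As a rough illustration of the rate check described above, the sketch below flags submittals whose bill rate exceeds a guideline for the same role and location. The `Submittal` record, the `rate_card` mapping, and the sample figures are all hypothetical and chosen for illustration; they do not represent any particular product's data model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Submittal:
    supplier: str
    role: str
    location: str
    bill_rate: float  # hourly bill rate proposed by the supplier


def flag_over_rate(submittals, rate_card):
    """Return (submittal, overage) pairs for submittals above the guideline.

    rate_card maps (role, location) to the maximum guideline bill rate.
    Submittals with no matching guideline are skipped, not flagged.
    """
    flagged = []
    for s in submittals:
        cap = rate_card.get((s.role, s.location))
        if cap is not None and s.bill_rate > cap:
            flagged.append((s, round(s.bill_rate - cap, 2)))
    return flagged


# Illustrative data only; real guidelines would come from market rate data.
rate_card = {("Data Analyst", "Austin"): 70.0}
submittals = [
    Submittal("Supplier A", "Data Analyst", "Austin", 68.0),
    Submittal("Supplier B", "Data Analyst", "Austin", 82.5),
]

for s, overage in flag_over_rate(submittals, rate_card):
    print(f"{s.supplier}: {s.role} in {s.location} is {overage:.2f} over guideline")
```

In a real-time setting, a check like this would run as each submittal arrives rather than in a periodic batch report, which is what makes prompt notification possible.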

The benefits don’t end here: real-time metrics also help businesses improve targeted hiring. By identifying the best sources of candidate inflow, businesses can focus their investments on the channels that attract the best candidates.

2. Regulate Process Efficiencies

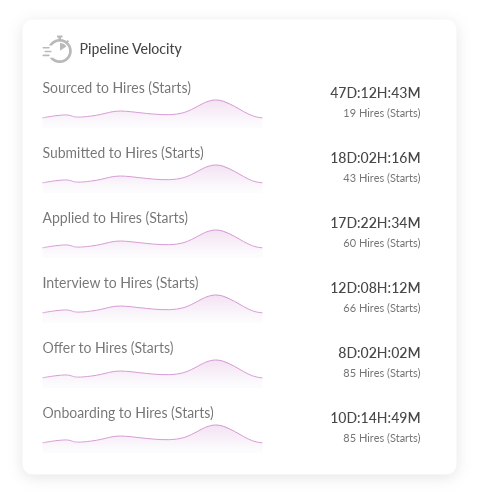

A real-time, data-driven approach can proactively identify the bottlenecks that slow down hiring and point businesses to the right actions to fix them. For example, a metric like pipeline speed indicates the average time candidates spend at each hiring stage. By actively monitoring this metric, businesses can avoid candidate stagnation caused by burdensome processes, sluggish review times, or recruiter inefficiencies.
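To make the pipeline speed metric concrete, here is a minimal sketch that averages the time candidates spend in each hiring stage. The input shape (one record per candidate per stage, with entry and exit timestamps) and the stage names are assumptions made for illustration.

```python
from collections import defaultdict
from datetime import datetime

SECONDS_PER_DAY = 86400


def pipeline_speed(stage_visits):
    """Average days spent per stage.

    stage_visits is an iterable of (candidate_id, stage, entered, exited)
    tuples, where entered and exited are datetime objects.
    """
    total_days = defaultdict(float)
    visit_count = defaultdict(int)
    for _candidate_id, stage, entered, exited in stage_visits:
        total_days[stage] += (exited - entered).total_seconds() / SECONDS_PER_DAY
        visit_count[stage] += 1
    return {stage: total_days[stage] / visit_count[stage] for stage in total_days}


# Illustrative records only.
visits = [
    ("c1", "screening", datetime(2024, 1, 1), datetime(2024, 1, 3)),
    ("c2", "screening", datetime(2024, 1, 2), datetime(2024, 1, 6)),
    ("c1", "interview", datetime(2024, 1, 3), datetime(2024, 1, 8)),
]

for stage, days in pipeline_speed(visits).items():
    print(f"{stage}: {days:.1f} days on average")
```

A stage whose average climbs noticeably above its usual level is a candidate bottleneck worth investigating.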

3. Forecast Hiring

Accurate forecasting enables businesses to reduce costs, improve planning, and maximize efficiency while ensuring they have the resources needed to meet demand. To achieve this, businesses are starting to incorporate data science into their talent acquisition strategies. Real-time metrics act as enablers of predictive hiring, allowing businesses to estimate hiring demand for specific job roles, especially high-frequency or high-volume roles. This requires a combined real-time and historical view of your pipeline velocity, including metrics that reveal the average time it takes to generate a successful candidate at each hiring stage. These metrics not only help businesses benchmark their hiring, but also improve visibility into their strategic workforce planning initiatives.
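One simple way to combine the real-time and historical views mentioned above is a weighted blend of average stage durations, summed into an estimated time to fill. This is only a sketch under stated assumptions: the blending weight, the function names, and the sample figures are hypothetical, and a production forecast would likely use a richer model.

```python
def blended_stage_days(recent, historical, recent_weight=0.5):
    """Blend recent and historical average days per stage.

    recent and historical map stage name -> average days. Only stages
    present in both are blended. recent_weight must be between 0 and 1.
    """
    if not 0.0 <= recent_weight <= 1.0:
        raise ValueError("recent_weight must be between 0 and 1")
    return {
        stage: recent_weight * recent[stage]
        + (1.0 - recent_weight) * historical[stage]
        for stage in recent
        if stage in historical
    }


def estimated_days_to_fill(recent, historical, recent_weight=0.5):
    """Sum the blended stage durations into a single estimate."""
    return sum(blended_stage_days(recent, historical, recent_weight).values())


# Illustrative averages only (days per stage).
recent = {"screening": 2.0, "interview": 4.0}
historical = {"screening": 4.0, "interview": 6.0}

print(blended_stage_days(recent, historical))
print(estimated_days_to_fill(recent, historical))
```

Raising `recent_weight` makes the estimate react faster to current market conditions, at the cost of more noise from short-term swings.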

4. Improve Candidate Quality

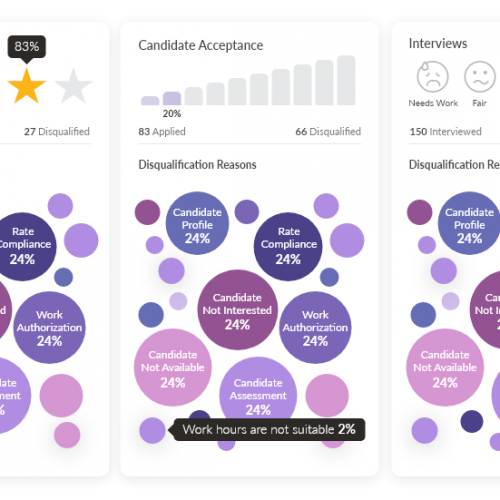

Truly assessing hiring quality requires businesses to look beyond the speed and cost of making hires. Businesses should also track other essential attributes that measure a candidate’s level of interest throughout the hiring process.

Real-time candidate dashboards give businesses a comprehensive view of candidate-centric information, including candidate quality, acceptance rates, interview rates, and disqualification reasons. These data points help businesses understand candidate preferences and priorities, and evaluate candidate engagement and overall interest in the business brand. By acting on this information, businesses can improve their talent attraction and engagement efforts, leading to a healthier pipeline of candidates to hire.

Data-driven hiring hasn’t always been an easy concept to implement: disparate data sources, metrics, and reporting timelines all contribute to lag, confusion, and barriers to making intelligence-based decisions. Prosperix’s end-to-end workforce solutions (Vendor Management System, Hiring Marketplace, Direct Sourcing, and On-Demand Talent Pools) all incorporate real-time analytics and provide data-driven intelligence and insights. Real-time data is also foundational for our AI/ML algorithms, which distribute, manage, and automate capabilities in our solutions, giving you an extraordinary ability to build and manage a thriving contingent workforce program.

Schedule a demo with us today to power your hiring efforts using real-time data!